Sound space exploration using CBCS and AIML

This project, created for the module Data and Machine Learning for Artistic Practice at Goldsmiths, University of London, deals with the problem of sound space exploration. A sound space can be defined as a collection of points, each describing arbitrary sonic attributes. Such spaces can be constructed from timbral descriptors of music, audio features of sound files, synthesizer parameters, and other attributes. The two main use cases for sound spaces are inspection (visualising a collection of sounds) and synthesis (creating new audio from an existing corpus of sounds). This work focuses on the second. I use assisted interactive machine learning (AIML) to navigate a grain-based sound space interactively, allowing users to form novel combinations of existing source material.
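
To make the idea of a grain-based sound space more concrete, here is a minimal sketch in the spirit of corpus-based concatenative synthesis (CBCS). It is not the project's actual implementation. The choice of descriptors (RMS loudness and spectral centroid), the grain size, the toy sine-wave corpus, and the helper names `slice_into_grains`, `describe_grains`, and `nearest_grain` are all assumptions made for illustration.

```python
import numpy as np


def slice_into_grains(signal, grain_size):
    """Split a mono signal into non-overlapping grains, dropping any remainder."""
    n_grains = len(signal) // grain_size
    return signal[: n_grains * grain_size].reshape(n_grains, grain_size)


def describe_grains(grains, sample_rate):
    """Return one point (rms, spectral_centroid_hz) per grain."""
    rms = np.sqrt(np.mean(grains ** 2, axis=1))
    magnitudes = np.abs(np.fft.rfft(grains, axis=1))
    freqs = np.fft.rfftfreq(grains.shape[1], d=1.0 / sample_rate)
    total = np.maximum(magnitudes.sum(axis=1), 1e-12)  # avoid division by zero
    centroid = (magnitudes * freqs).sum(axis=1) / total
    return np.column_stack([rms, centroid])


def nearest_grain(points, target):
    """Index of the grain closest to `target` after min-max scaling each axis."""
    lo = points.min(axis=0)
    span = np.maximum(points.max(axis=0) - lo, 1e-12)
    scaled_points = (points - lo) / span
    scaled_target = (np.asarray(target, dtype=float) - lo) / span
    distances = np.linalg.norm(scaled_points - scaled_target, axis=1)
    return int(np.argmin(distances))


# Hypothetical toy corpus: a quiet low tone followed by a loud high tone.
sample_rate = 8000
grain_size = 512
t = np.arange(grain_size) / sample_rate
corpus = np.concatenate([
    0.2 * np.sin(2 * np.pi * 220 * t),
    0.8 * np.sin(2 * np.pi * 1760 * t),
])

grains = slice_into_grains(corpus, grain_size)
points = describe_grains(grains, sample_rate)  # the "sound space"
idx = nearest_grain(points, target=[0.5, 1500.0])
print(points, idx)
```

Each row of `points` is one grain's position in the space, and `nearest_grain` picks the grain to play for a requested position. With this toy corpus, a loud and bright target selects the second grain. An interactive system would replace the fixed `target` with a position supplied by the user or by a learned mapping.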

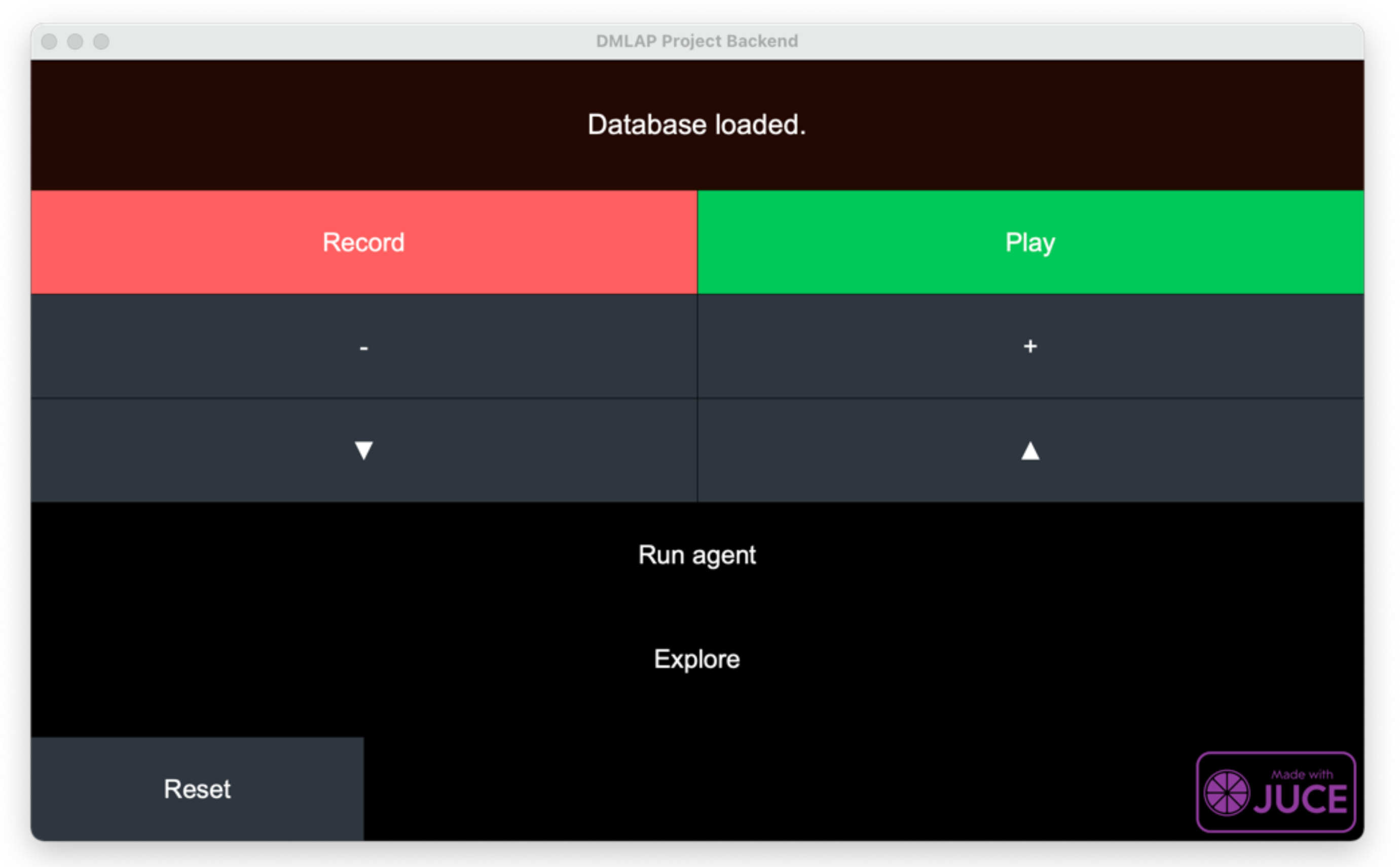

Screenshot

A video explaining and demonstrating the system is available via YouTube.

For an audio demo, skip to 10:00.